The Double-Edged Sword of ChatGPT: How Threat Actors Could Use It for Evil

With the development of large language models like ChatGPT, it's becoming increasingly easier for cybercriminals to create more sophisticated phishing emails. ChatGPT, or Chat Generative Pretrained Transformer, is a state-of-the-art natural language processing model that is capable of generating human-like text based on a given prompt. This technology has the potential to revolutionize the way we interact with machines, but it also presents a unique set of challenges when it comes to cybersecurity. In this blog post, we will explore how ChatGPT could be used by cybercriminals to develop more convincing phishing emails, and what we can do to protect ourselves from these types of attacks.

As the capabilities of large language models like ChatGPT continue to improve, it is likely that we will see an increase in the number of sophisticated phishing attacks. Cybercriminals will be able to generate highly convincing emails that are tailored to individual recipients, making it even harder for people to detect and defend against these attacks. In addition, ChatGPT and other language models could potentially be used to automate the entire phishing process, from generating the initial email to collecting and storing the stolen information.

The potential implications of this technology for cybersecurity are alarming. If cybercriminals are able to use ChatGPT and other large language models to create highly convincing phishing emails, it could lead to a significant increase in the number of successful attacks. This could result in widespread financial losses and damage to individuals' and organizations' reputations. It is important that we take steps to protect ourselves against these types of attacks.

Impressive, right? But I have to admit, I didn't write any of that. Everything above was written by ChatGPT after I asked it to "write the introduction of a blog post about how ChatGPT could be used by cybercriminals to develop more sophisticated phishing emails." Yikes.

Using ChatGPT for Business Email Compromise

If you've spent any time experimenting with ChatGPT, you know how transformational this technology could become. With it, you can write college-level essays, generate code in various programming languages, and answer complex questions without wading through pages of Google search results. At its core, it has the potential to make our work much more efficient.

But any technology can be used by bad actors, and for all of its benefits, ChatGPT could also be abused for malicious purposes. So how could cybercriminals exploit it to develop more sophisticated cyber threats?

Currently, many business email compromise (BEC) actors rely on “formats” (templates) when they launch their campaigns. Because of this, many BEC attacks share common content that security tools can use to detect and block them. ChatGPT, however, could allow scammers to craft unique content based on an initial format, making detection based on matching known malicious text strings much more difficult. To test this, we asked ChatGPT to write five new variations of a format that's commonly used in payroll diversion BEC attacks. It generated a list of new options that were all clearly distinct from one another, and it delivered them in less than a minute.
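To make the detection problem concrete, here is a minimal sketch of text-string matching, assuming a filter that looks for phrases taken from a known format. The signature list, the `matches_signature` helper, and both sample emails are invented for illustration; they are not real attack samples or the output of our test, and real security tools are more involved than this.

```python
# Hypothetical signature list: phrases lifted from a known payroll-diversion format.
KNOWN_SIGNATURES = [
    "update my direct deposit information",
    "before the next payroll is processed",
]


def matches_signature(body: str, signatures=KNOWN_SIGNATURES) -> bool:
    """Return True if any known phrase appears verbatim (case-insensitive)."""
    lowered = body.lower()
    return any(sig in lowered for sig in signatures)


# Invented examples for illustration only.
template_email = (
    "Hi, I need to update my direct deposit information "
    "before the next payroll is processed. Can you help?"
)
reworded_email = (
    "Hello, I recently switched banks and would like my salary sent "
    "to the new account starting this pay period. What do you need from me?"
)

print(matches_signature(template_email))  # the known wording is caught
print(matches_signature(reworded_email))  # same request, new wording, no match
```

Both messages ask for the same thing, but only the one that reuses the known wording is caught. A reworded variant passes through untouched.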

ChatGPT could also be used to significantly increase the overall sophistication of social engineering attacks, making victims more likely to fall for them. We ran a test and asked ChatGPT to “write a very persuasive social engineering email requesting a wire transfer to be paid to a supplier with a convincing backstory.” The resulting email was much more sophisticated than most of the real attacks we see every day. It contains a plausible story (a vendor was overpaid due to an error), demonstrates empathy (“I understand wire transfers can be a hassle...”), and is written without any of the grammatical or spelling errors we teach users to watch for.

Another unintended consequence of ChatGPT is that it could provide feedback to malicious actors to help them better understand why and how certain tactics can be used successfully—teaching them how to craft more effective phishing emails on their own. For example, we asked ChatGPT to create an email that had a high likelihood of getting a recipient to click on a link. The resulting message looked very similar to many credential phishing emails we see at Abnormal. When we followed up with a question asking the bot why it thought the email would have a high success rate, it returned a lengthy response detailing the core social engineering principles behind what makes the phishing email effective.

None of these examples were particularly hard to create, and ChatGPT cut hours from the time it might otherwise take to write these emails and run them through grammar checkers to improve their odds of success. There is little denying that tools like this can be incredibly useful in everyday life, but this short experiment shows just how easily they can also be misused.

Using AI to Protect Against AI

So what can we do about the malicious activity that may stem from ChatGPT? The good news is that defenses available today can still detect the more sophisticated emails that ChatGPT and similar large language models make possible. Defenses that rely on static indicators, including text strings, would be weakened by a shift to more unique content in BEC emails, but defenses that use behavioral analysis to identify threats would likely remain effective against these attacks.

Unfortunately, more sophisticated BEC emails may have a higher success rate if they reach their targets, which underscores the importance of using technology to stop these attacks. The right tools, ones that leverage behavioral and relationship analysis such as contextual identification of a suspicious financial request or identification of an impersonated sender, would still flag these messages as malicious, no matter who wrote them.
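As a rough illustration of why wording matters less to this kind of defense, the sketch below checks a message against signals that do not depend on the text at all. Everything here is an assumption made for the example: the `Message` fields, the `KNOWN_SENDERS` directory, the `behavioral_flags` helper, and the sample addresses are hypothetical, and this is not a description of how any particular product works.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class Message:
    display_name: str
    from_address: str
    reply_to: str
    is_financial_request: bool  # assumed to come from an upstream classifier


# Hypothetical directory of known contacts and the addresses they normally use.
KNOWN_SENDERS = {
    "Dana Reyes": {"dana.reyes@example.com"},
}


def behavioral_flags(msg: Message, known=KNOWN_SENDERS) -> list[str]:
    """Collect anomalies that hold regardless of how the email is worded."""
    flags = []
    usual = known.get(msg.display_name)
    if usual is not None and msg.from_address.lower() not in usual:
        flags.append("known display name used with an unfamiliar address")
    if msg.reply_to and msg.reply_to.lower() != msg.from_address.lower():
        flags.append("reply-to differs from the sending address")
    if msg.is_financial_request and flags:
        flags.append("financial request from a sender with identity anomalies")
    return flags


# Invented messages for illustration only.
suspicious = Message(
    "Dana Reyes", "dana.reyes@examp1e-mail.com", "payments@examp1e-mail.com", True
)
routine = Message("Dana Reyes", "dana.reyes@example.com", "", False)

print(behavioral_flags(suspicious))
print(behavioral_flags(routine))
```

The first message raises all three flags and the second raises none. Rewriting the body of the suspicious email would not change that result, because none of the checks read the body.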

But don't just take my word for it. Let's see what ChatGPT has to say:

Behavioral analysis is a type of email defense that looks at the behavior of the sender and recipient, rather than just looking for specific indicators of a phishing email. This can be more effective at detecting sophisticated phishing emails developed by large language models, like ChatGPT, because these models are able to generate realistic and plausible messages that may not contain the typical indicators of a phishing email.

By looking at the behavior of the sender and recipient, behavioral analysis can identify anomalies and suspicious patterns that may indicate a phishing attack, even if the email itself does not contain any obvious indicators of one. Additionally, because large language models like ChatGPT can generate text that is difficult for humans to distinguish from real human-written text, traditional methods of detecting phishing emails, such as keyword filtering and spam filters, may not be effective against these types of attacks. In contrast, behavioral analysis can help identify these sophisticated phishing emails by looking for patterns of behavior that are indicative of a phishing attack, regardless of the specific words used in the email.

So what does all this mean? New security tools will become more important than ever in the coming years as ChatGPT and tools like it become more widespread. To better protect your organization from these types of attacks, now is the time to implement a behavioral AI-based tool, before ChatGPT takes your users by surprise.

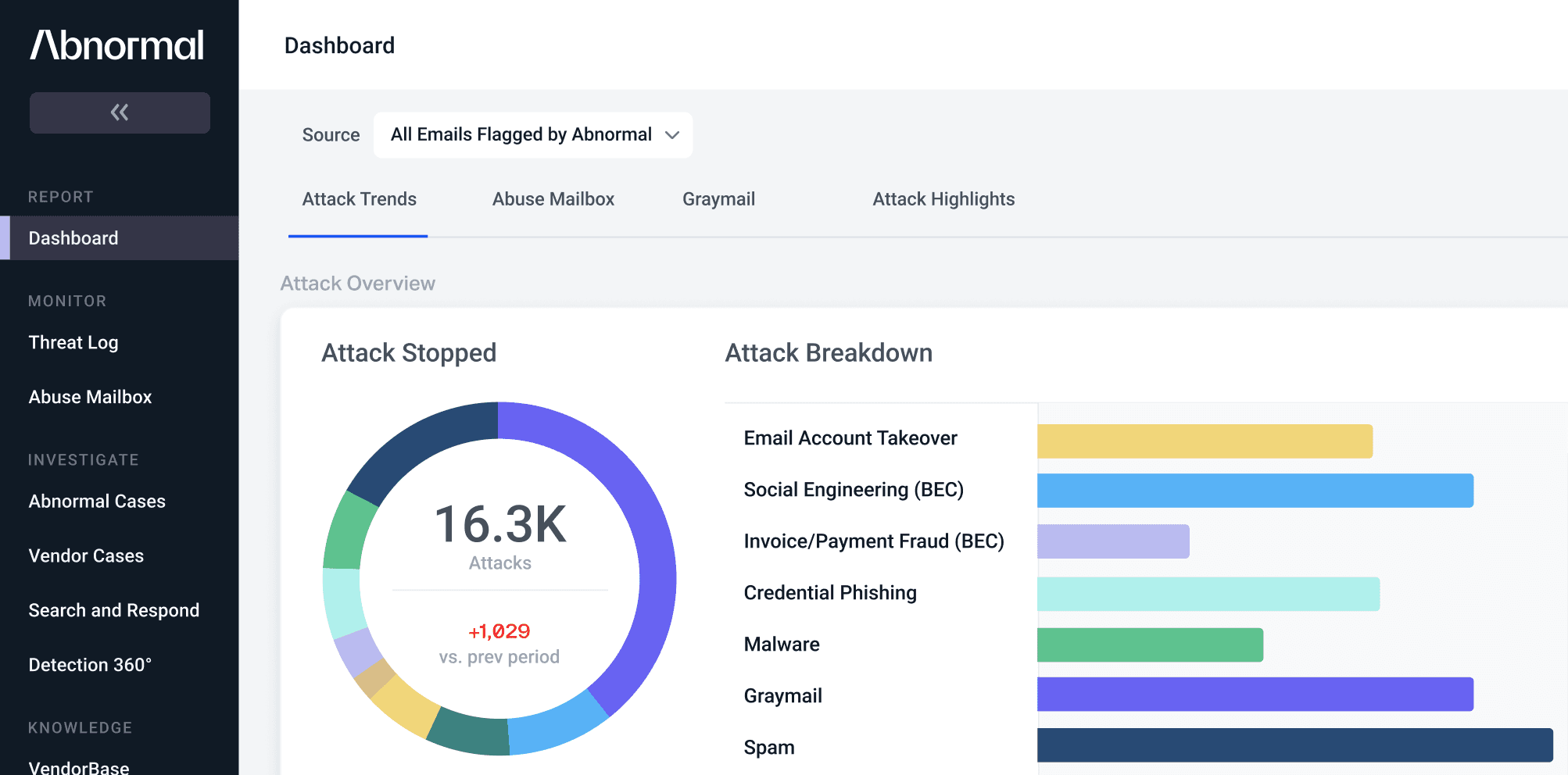

To discover more about how Abnormal can stop attacks that include convincing language and new text strings, request a demo of the platform today.
